ManuelAlonso

Aperture

Revitalizing a photo-sharing platform by leveraging user research, prioritization frameworks, and iterative design to improve customization features and user retention.

Introduction

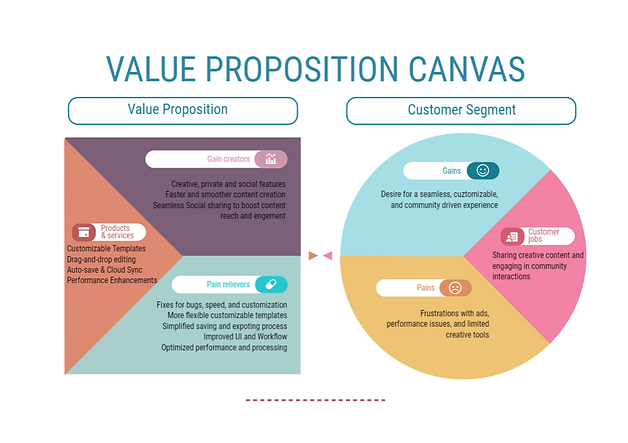

Aperture is a photo-sharing platform that generates revenue through advertising. However, engagement had declined, affecting ad revenue. To address this, I worked on improving customization features to increase user retention and satisfaction.

The Scope

This capstone project is a case study from my Product Management Certification Course with CareerFoundry.

The goal was to enhance customization features while balancing user needs, technical feasibility, and business objectives. I focused on user research, feature prioritization, and iterative testing to create a product roadmap that aligned with Aperture’s growth strategy.

Project Scale: May-December 2024

Skills/Methodologies: Agile, Stakeholder Management, User and Market Research, Prioritization Frameworks (MoSCoW, RICE, Effort/Impact), Wireframing, Prototyping, User Testing (A/B Testing).

Tools used: Miro, Figma, Confluence, G Suite, Canva, Loom, Jira, and Slack.

The Challenge

Aperture, a photo-sharing app, faced a decline in active users, impacting ad revenue and long-term sustainability. The core audience (18-24-year-olds) was disengaging because of rigid templates and outdated workflows, and migrating to competitors that offered greater flexibility and creativity.

Key Findings:

- 85% of surveyed users were frustrated by limited customization.
- 30% increase in unfinished projects was linked to confusing save mechanics.
- Competitor analysis showed that rival platforms provided more intuitive workflows.

To stay competitive, Aperture needed to modernize content creation, simplify editing, and expand customization options—or risk losing its audience entirely.

Product Phases

Phase 1: Defining the Problem

Aperture’s user engagement was declining, and the research pointed to a lack of customization and frustrating workflows as the main culprits. 85% of surveyed users reported that they wanted greater control over design elements, but Aperture’s rigid templates didn’t allow them to fully personalize their content. Additionally, confusing saving mechanics led to frequent abandoned projects.

To validate these insights, I conducted a competitive analysis. Findings showed that platforms like Canva and Adobe Express offered intuitive customization features and stronger social-sharing tools, making them more engaging for younger users. If Aperture wanted to retain its audience and attract new users, it needed to match and surpass these experiences.

Phase 2: User Research

To refine the approach, I conducted user research that included 10 in-depth user interviews, 20 survey responses, and heatmap analysis of existing user behavior. The results were clear: users wanted a seamless, highly flexible editing process that mirrored the ease of industry leaders.

A significant pain point was rigid templates restricting creativity, which 85% of users flagged as a major limitation. Additionally, session recordings revealed frequent drop-offs due to poor save functionality and a lack of intuitive design tools. The research confirmed that customization, simplified workflows, and an effortless save process would be essential for improving engagement and retention.

Phase 3: Ideation

Using MoSCoW prioritization, RICE scoring, and an Effort-Impact matrix, I identified high-impact, feasible features. The focus was on customizable templates, improved drag-and-drop controls, and a simplified save system. Prioritization ensured that development efforts aligned with user needs while balancing feasibility constraints.
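
As a rough illustration of the RICE step, the sketch below applies the standard formula, (Reach × Impact × Confidence) / Effort, to a few of the features named in this case study. The `Feature` class, the `rank` helper, and every numeric input are hypothetical placeholders chosen for the example; they are not the scores used in the project.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    name: str
    reach: float       # users affected per period (placeholder value)
    impact: float      # relative impact multiplier (placeholder value)
    confidence: float  # 0.0 to 1.0 (placeholder value)
    effort: float      # person-months (placeholder value)

    @property
    def rice(self) -> float:
        # Standard RICE formula: (Reach * Impact * Confidence) / Effort
        return self.reach * self.impact * self.confidence / self.effort


def rank(features):
    """Return features sorted from highest to lowest RICE score."""
    return sorted(features, key=lambda f: f.rice, reverse=True)


# Hypothetical inputs for illustration only, not project data.
features = [
    Feature("Auto-Save & Cloud Sync", reach=1000, impact=2, confidence=0.8, effort=2),
    Feature("Rollback Feature", reach=500, impact=2, confidence=0.5, effort=5),
    Feature("Customizable Templates", reach=1000, impact=3, confidence=0.8, effort=2),
]

for feature in rank(features):
    print(f"{feature.name}: {feature.rice:.0f}")
```

Dividing by effort is what lets a smaller feature outrank a larger one: a high-reach item that takes many person-months can score below a modest item that ships quickly.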

Effort-Impact Analysis

To refine our prioritization further, we plotted each feature on an Effort-Impact Matrix, ensuring a balance between feasibility and impact:

- Quick Wins (High Impact, Low Effort): Customizable Templates, Auto-Save & Cloud Sync, Drag-and-Drop Editing.
- Major Projects (High Impact, High Effort): Rollback Feature to Revert Updates.
- Fill-Ins (Low Impact, Low Effort): Automated Bug Detection System.
- Thankless Tasks (Low Impact, High Effort): User Feedback Portal for Beta Testing.

This structured approach ensured we focused on features that delivered maximum value with minimal complexity, forming the foundation for the Minimum Viable Product (MVP). The prioritization process also enabled better resource allocation, allowing us to address key pain points efficiently while keeping the development timeline realistic.
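
The quadrant logic of the matrix above can be sketched in a few lines. The high/low placements below are taken from the list in this section; the `quadrant` and `group` function names are illustrative assumptions, not part of any tool used in the project.

```python
def quadrant(high_impact: bool, high_effort: bool) -> str:
    """Map an impact/effort pair to its Effort-Impact quadrant."""
    if high_impact:
        return "Major Projects" if high_effort else "Quick Wins"
    return "Thankless Tasks" if high_effort else "Fill-Ins"


# (feature, high_impact, high_effort), as placed in the matrix above.
placements = [
    ("Customizable Templates", True, False),
    ("Auto-Save & Cloud Sync", True, False),
    ("Drag-and-Drop Editing", True, False),
    ("Rollback Feature to Revert Updates", True, True),
    ("Automated Bug Detection System", False, False),
    ("User Feedback Portal for Beta Testing", False, True),
]


def group(items):
    """Group feature names by quadrant, preserving input order."""
    groups = {}
    for name, high_impact, high_effort in items:
        groups.setdefault(quadrant(high_impact, high_effort), []).append(name)
    return groups


for label, names in group(placements).items():
    print(f"{label}: {', '.join(names)}")
```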

Phase 4: Wireframes & Prototyping

To test the new design direction, we built wireframes and prototypes, refining the user journey to be intuitive and efficient. The process included:

- Lo-Fi Wireframes outlined primary interactions and layout structure.
- Mid-Fi Prototypes were developed in Figma, allowing us to test early functionality.

User Testing Insights

After testing, I saw clear improvements:

- 90% of users found drag-and-drop significantly easier than previous customization workflows.
- Auto-save reduced frustration, ensuring users no longer lost work unintentionally.
- Template customization was the most highly valued feature, proving its necessity for engagement.

Phase 5: User Testing, Iterations & MVP

To fine-tune the feature set, I conducted A/B testing comparing different customization experiences:

- Prototype A: Standard template selection with limited flexibility.
- Prototype B: Fully customizable templates with drag-and-drop functionality.

Results & Iterations

Our findings led to clear product decisions:

- Prototype B improved task completion by 25%, demonstrating its usability advantages.
- Users spent 40% less time completing edits, indicating improved efficiency.
- 80% of users engaged with drag-and-drop features, confirming demand.

These insights shaped the final MVP, ensuring a balance between simplicity, usability, and feature richness.
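
One way to check whether a difference in task completion between two prototypes is more than noise is a two-proportion z-test. The sketch below is a minimal version using only the standard library. The function name and the participant counts are hypothetical, since this case study does not report per-variant sample sizes, and this is not necessarily the analysis that was run in the project.

```python
import math


def two_proportion_z_test(success_a, n_a, success_b, n_b):
    """Return (z, two-sided p-value) for the difference in completion rates."""
    p_a = success_a / n_a
    p_b = success_b / n_b
    # Pooled completion rate under the null hypothesis of no difference.
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("standard error is zero; rates are all 0% or all 100%")
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts for illustration only: completed tasks / participants.
z, p = two_proportion_z_test(success_a=60, n_a=100, success_b=75, n_b=100)
print(f"z = {z:.2f}, p = {p:.3f}")
```

With small prototype-testing groups, a result like this is best read as directional evidence to confirm during the soft launch rather than as a final verdict.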

Phase 6: Launch & Tracking Metrics

I developed a staggered launch plan consisting of an initial soft launch followed by a full-scale launch. This required strong cross-functional collaboration and coordination to keep stakeholders aligned on objectives, logistics, budget, marketing strategy, and risk mitigation.

Because we needed to validate the impact of the new features within resource constraints, the staggered strategy let us gather user feedback during the soft launch before committing to a full-scale release. The aim was to test engagement levels, assess feature adoption, and address usability concerns early. This helped ensure that improvements could be made during the soft launch, leading to a smoother transition for the larger audience.

Product Monitoring

To maintain post-launch stability, I would track performance issues like load delays and crashes while gathering feedback through in-app surveys, direct client communication, and support channels.

Launch Metrics

Key success metrics would focus on feature adoption, session duration, and retention, aiming for 60% adoption in the first month and 70% weekly user retention to measure long-term engagement.
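
The two targets above can be checked with a small helper like the one sketched here. The 60% and 70% thresholds come from this plan. The definitions of adoption (users who tried the new features divided by active users) and weekly retention (users from a cohort who returned the following week divided by cohort size), the function names, and the sample counts are all assumptions made for illustration.

```python
ADOPTION_TARGET = 0.60   # first-month feature adoption target from the plan
RETENTION_TARGET = 0.70  # weekly user retention target from the plan


def rate(numerator: int, denominator: int) -> float:
    """Return numerator / denominator, rejecting empty denominators."""
    if denominator <= 0:
        raise ValueError("denominator must be positive")
    return numerator / denominator


def launch_report(adopters, active_users, retained, cohort_size):
    """Compare observed adoption and retention against the launch targets."""
    adoption = rate(adopters, active_users)
    retention = rate(retained, cohort_size)
    return {
        "adoption": adoption,
        "adoption_met": adoption >= ADOPTION_TARGET,
        "retention": retention,
        "retention_met": retention >= RETENTION_TARGET,
    }


# Hypothetical soft-launch counts for illustration only.
report = launch_report(adopters=650, active_users=1000, retained=680, cohort_size=1000)
print(report)
```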

Final Learnings & Reflection

This case study provided hands-on experience in user research, feature prioritization, and iterative testing. The biggest takeaway was the importance of data-driven decision-making—using structured frameworks like RICE scoring and A/B testing to guide product decisions. My mentor highlighted my ability to synthesize complex data, create structured prioritization models, and clearly articulate decision-making processes—all critical PM skills.

One challenge was balancing user needs with technical feasibility—breaking features into actionable, iterative steps required thoughtful planning. Another key learning was stakeholder communication, ensuring alignment across engineering, design, and business teams.

Next Steps

Moving forward, I aim to apply these learnings to real-world product challenges, improving my ability to translate research into feature development, manage trade-offs, and align stakeholders around a shared vision. This project reinforced how user insights, iterative testing, and business objectives must align to create successful products.